In the Top Data Science AI Tech Blog Series, Kai Lehtinen, COO, discusses with Juho Piironen, Lead Data Scientist, PhD, the power and benefits of combining different machine learning models in a modular manner to solve various customer use cases. A modular approach can sometimes make it possible to solve business problems in a more flexible and scalable way.

Kai: Juho, could you share with us what lies at the core of the approach of combining models?

Juho: Sure. In basic terms, combining models in this context means that the output of one model is used as the input to another model. Depending on the complexity of the business problem, the chain may contain several models. Together, the submodels form one big model that produces the desired output from the original input. The core idea is that even though one could build a single model directly from the original input to the final output, breaking it into smaller submodels can in some cases be very useful.
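In code, this kind of chaining amounts to function composition. The sketch below is a minimal illustration, assuming each trained submodel can be called as a plain Python function; `chain`, `to_celsius` and `is_hot` are hypothetical names used only for this example, not part of any library or of the authors' tooling.

```python
from typing import Any, Callable, Sequence

# A "model" here is anything callable that maps an input to an output.
Model = Callable[[Any], Any]


def chain(submodels: Sequence[Model]) -> Model:
    """Combine submodels so that each one's output is the next one's input."""
    def combined(x: Any) -> Any:
        for submodel in submodels:
            x = submodel(x)
        return x
    return combined


# Hypothetical toy submodels standing in for trained models.
def to_celsius(fahrenheit: float) -> float:
    return (fahrenheit - 32) * 5 / 9


def is_hot(celsius: float) -> bool:
    return celsius > 30


big_model = chain([to_celsius, is_hot])
print(big_model(100))  # 100 °F is about 37.8 °C, so this prints True
```

Because each submodel remains a separate object, any one of them can be replaced or retrained without touching the others.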

Kai: What are the typical benefits of using this approach?

Juho: In general, the modularity of combining models brings many advantages. These include, for example:

- When causal learning is needed, structuring the submodels to reflect the causal relationships between the different variables is a natural choice (in the causal learning literature, this is known as a structural causal model).

- Breaking the model into smaller pieces can also be handy in other situations, for example if collecting data for the big model, or training it, is otherwise difficult or awkward.

- Training, retraining, and maintaining the smaller submodels can be much more convenient and efficient than doing the same for one big model.

Kai: Well, that makes a lot of sense. Could you elaborate a bit on how you first came to study this approach?

Juho: I’ve spent quite some time working on several customer projects that focus on model predictive control. This is a two-stage approach: first, we learn a model that predicts an output variable of interest (say, product quality) from some controllable variables. We then find optimal values for these controllable variables such that the predicted output matches a desired value. This requires learning a causal model, so structuring the model to reflect the causal structure of the data is a natural choice.

My experience in this domain has been very positive, which led me to consider using multiple submodels in completely different domains as well, including ones that do not involve causal learning at all.

Kai: Could you share with us an example use case where this approach has proven to be handy?

Juho: Sure. This is quite a cool one, from a casino environment. It’s a rather simple but great example of how the modularity of the approach can provide significant benefits. The use case, which was designed in close collaboration with my colleague Emma Meeus, is a computer vision application that counts casino chips of various colours in an image.

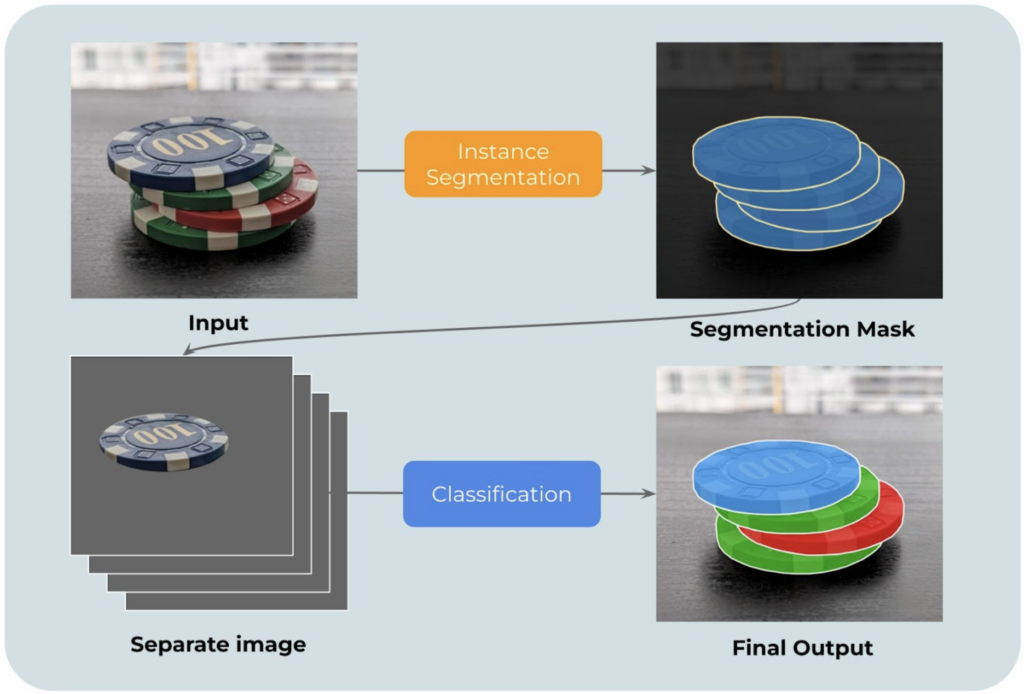

This could be solved, for example, by training one instance segmentation model that segments the chips in the image and classifies them by colour. This would be the traditional out-of-the-box approach. However, if we want to use such a model for several different casinos, each of which has a different chipset (that is, the chip colours differ), we would need to either:

- Train a separate model for each casino. This means collecting and annotating separate training data for each casino, which takes a lot of work. It is also very inefficient, since data from previous casinos cannot be used to train the model for the next one when the chipsets differ.

- Use the same model for every casino. This is more efficient, since data from all the casinos can be used to train the model, but the downside is that the number of classes (that is, the number of chip types) must be extended whenever data from a new casino arrive. This is rather awkward, because there is no upper limit on the number of different chip types, and it also causes trouble, for example, if two casinos have chips that look very much alike.

The problem is easily solved by using separate models for segmentation and classification.

- The segmentation model is trained simply to find the pixel masks that correspond to chips, regardless of their colour. All the available data (from all casinos) can be used to train it, and it can typically be applied to new casinos without any extra training. The model generalises well to new casinos because chips always have more or less the same shape, even if their colours differ.

- The classifier is trained separately for each casino. This is fairly trivial, because collecting classification data is easy: all it takes is a few pictures in which only one chip type is present at a time, after which the segmentation model is used to extract training examples for the classifier for each chip type.
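The division of labour between the two models can be sketched as follows. This is not the implementation from the casino project: the segmentation model is replaced by a hypothetical stand-in (`fake_segment`) that returns one list of RGB pixels per detected chip, and the per-casino classifier is a simple nearest-centroid classifier on each chip's mean colour, chosen here only for illustration. All names and colour values are assumptions.

```python
from collections import Counter
from math import dist
from typing import Callable, Dict, List, Sequence, Tuple

Pixel = Tuple[float, float, float]           # (R, G, B)
Chip = List[Pixel]                           # pixels inside one chip's mask
Segmenter = Callable[[object], List[Chip]]   # image -> one pixel list per chip


def mean_colour(pixels: Sequence[Pixel]) -> Pixel:
    """Average each colour channel over the given pixels."""
    return tuple(sum(channel) / len(pixels) for channel in zip(*pixels))


class ChipClassifier:
    """Per-casino nearest-centroid classifier on mean chip colour (illustrative)."""

    def __init__(self) -> None:
        self.centroids: Dict[str, Pixel] = {}

    def fit(self, images_by_type: Dict[str, List[object]], segment: Segmenter) -> None:
        # Each training image shows only one chip type, so every chip the
        # shared segmenter finds in it becomes a labelled example.
        for chip_type, images in images_by_type.items():
            examples = [mean_colour(chip) for image in images for chip in segment(image)]
            self.centroids[chip_type] = mean_colour(examples)

    def predict(self, chip: Chip) -> str:
        colour = mean_colour(chip)
        return min(self.centroids, key=lambda t: dist(colour, self.centroids[t]))


def count_chips(image: object, segment: Segmenter, classifier: ChipClassifier) -> Counter:
    """Shared segmentation first, then the casino-specific classifier."""
    return Counter(classifier.predict(chip) for chip in segment(image))


# Hypothetical stand-in for the shared segmentation model:
# here an "image" is already given as a list of chips.
def fake_segment(image: object) -> List[Chip]:
    return list(image)


casino_a = ChipClassifier()
casino_a.fit(
    {
        "red": [[[(200, 30, 30), (210, 20, 30)], [(190, 40, 20)]]],
        "blue": [[[(20, 30, 200), (30, 20, 210)]]],
    },
    fake_segment,
)
table_image = [[(205, 25, 30)], [(25, 35, 190)], [(198, 40, 35), (202, 30, 28)]]
print(count_chips(table_image, fake_segment, casino_a))
```

For another casino, only a new `ChipClassifier` is fitted from a few single-chip-type photos; the segmenter is shared and stays unchanged.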

With the two-model approach, data use is very efficient and the effort needed for each new casino is minimal.

Kai: Well, that is an excellent example of the power of this approach. It gives flexibility and scalability, and also minimises the data-related tasks without jeopardising the robustness of the solution. Great stuff. How advanced or bleeding-edge is this technology?

Juho: This is certainly nothing new. But I’ve often seen an attitude where you set up one giant model and just throw in all the data, hoping it will work, without giving it much thought. In many cases, dividing the problem into smaller pieces can be very beneficial.

Kai: Got it. To conclude, when should one consider using this approach?

Juho: I mentioned causal inference problems, but this approach can come in handy in virtually any problem. The most important thing is to think about model training and application holistically: What data are available, and what is needed to train the model? How does training scale when you need to train or apply the same model for another, similar case? How can you use all the data you have as efficiently as possible? Sometimes it is beneficial to use several models instead of just one (like separate segmentation and classification models). This is not often discussed in university machine learning courses, but it often comes in handy in applied work.

Authors:

Juho Piironen, Lead Data Scientist, PhD

Kai Lehtinen, COO, MSc.